It's the elephant in the room. We know IPv6 is around the corner. (Some would say it's three corners back and we have just been ignoring it.) However, within the UK, GridPP is making progress.

More and more sites are offering fully dual-stacked storage systems. Indeed, some sites are already fully dual-stacked.

Other sites are slowly working IPv6 components into their current systems.

As a group, we are looking at new gateway technologies that put dual-stack IPv4/IPv6 front ends on IPv4-only back ends. Here I am thinking of the work the RALPP site has been doing in collaboration with CMS on xrootd proxy caching.

Even the FTS middleware at the UK Tier1 is just about to be deployed dual-stacked. It is an interesting time for IPv6 within the UK, and not in the sense of the proverbial Chinese curse.

These are just some of the storage-related highlights and current activities for IPv6 integration. I leave other grid components as an exercise for the reader.

07 November 2017

10 October 2017

What has IT ever done for physics?

Yesterweek was the IT expo in London; the audience was very much data centre and IT types, IT security types, techies and CEOs, from big established companies to startups.

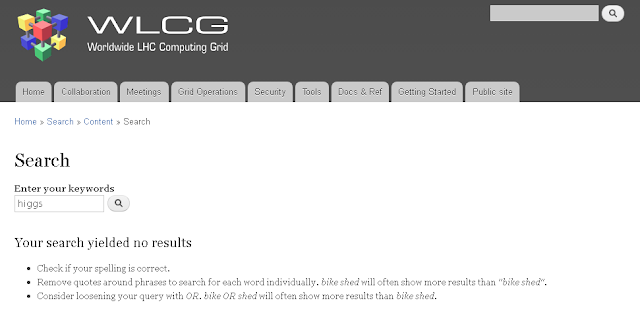

The opening keynote was given by Professor Brian Cox, whose talk was titled "Where IT and physics collide." The word "collide" suggests WLCG, or at least something HEPpy: maybe a focus on the data management and the compute that "discovered the Higgs", or, with this also being an IT security conference, the security behind WLCG.

Fortunately, he gave a talk about physics. He talked about LIGO, SDSS, colliding black holes, and, because the organisers asked him to, quantum computing. It was a very engaging talk and everyone enjoyed it. But he did not talk about the IT. Without the computation, we would not know what colliding black holes look like.

And without the WLCG computing infrastructure, we would not have found the Higgs boson.

Prof Cox's friend, Prof Jon Butterworth, who is in ATLAS, does mention the computing in his very enjoyable book "Smashing Physics", but only briefly: about a page, plus a few remarks about the other things it has done. And rightly so; they are both physicists and should talk about the exciting physics coming from the LHC and from the other gizmos. But big science needs big compute (and, er, big data). As we know, this sort of stuff doesn't just set itself up or run itself; it requires significant investment and effort. And you can't just "get it in the cloud", not on this scale, and even if you could, it would still require investment and effort.

Incidentally, one of the other things they talked about at the conference: some people are trying to engineer a car that will go 1000 mph. Designing it also takes a lot of computer simulation. But where will it drive? Not on British roads...


23 August 2017

Clouds have buckets but also need baskets

Sometimes supposedly simple things can be slightly tricky. This story relates to a data sharing exercise for the cloud workshop last year.

Alice wants to share a 150 GB file with Bob. It is too large to send by email, too large even to share through "free" cloud storage, and too large to conveniently put on a memory stick and send by post.

Ordinarily she'd get a piece of online storage somewhere, upload the file to it, and send Bob a link. But let's suppose we do it the other way: Bob gets some temporary storage and gives Alice access rights to it, Alice then uploads her file to that space and Bob retrieves it and gets rid of the space. Easy, yes?

If they were both scientists with grid access, it'd be fairly easy to find a piece of space and share files, but let's suppose Alice can't use the grid, or they are not in the same VO, or they want to share privately.

This sounds like a use case for the cloud, then? So Bob gets a piece of cloud storage, a bucket. There's a blob store with keys that can be shared, but it requires a client to talk to it (or perhaps a browser plugin), and Bob doesn't want to ask Alice to install anything; she should just be able to use her browser. OK, so how about a publicly writable space (with a non-guessable name)? Nope, there's nothing that can be made world-writable: probably a Good Thing™ in the Grand Scheme of Things, but it makes Bob's life a bit more difficult. Dropbox can create a shared space, but Bob would have to "unlock" more space, and he doesn't want to pay for a whole month, nor does he want a subscription that he'd have to remember to cancel.

OK, so Bob next looks at cloud compute, and gets a virtual machine in the cloud with enough disk space. He sets up Apache on it. While he fiddles with this, he reminds himself how iptables works, so he can protect the machine and test in a protected environment (and obviously he's careful not to block off his ssh connection!). He looks at DAV, but it's too complicated. How about a normal upload? Bob reminds himself how the HTML works. Yes, that might work; except it doesn't: a quick search reveals that the server side needs a CGI script to receive the upload. There are modules for Perl, PHP and Python, but the example scripts need a bit of tweaking to make them look right. Obviously he also needs to build and install the module dependencies that are not part of the standard operating system packages. Meanwhile, because it is a compute resource, Bob is also paying for its idle time, and it idles a bit because this exercise is not Bob's highest priority.

There is no certificate on the cloud host, so Bob has to use HTTP rather than HTTPS; but this is supposed to be a short exercise and it's not sensitive data, so that's probably OK. However, it does need a bit of protection because it is a writeable resource, Bob thinks, so he reminds himself how BasicAuth works and sets up a temporary password that his friend will remember, and one for himself to test with, which he can remove once his testing is done (so he doesn't expose Alice's password during testing). He gradually opens the virtual machine's firewall and is annoyed when it still doesn't work, until he remembers that the cloud platform has its own firewall too; it takes him half a minute to locate the network security rules and add port 80. He then tests from home too, and finally mails the hostname, username and password to Alice.
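For anyone else reminding themselves how BasicAuth works: the browser sends an `Authorization: Basic <token>` header, where the token is simply the base64 encoding of `username:password`. Over plain HTTP the credentials are therefore encoded, not encrypted. Here is a minimal sketch of the server-side check in Python; the function names and example credentials are hypothetical, not Bob's actual setup (which used Apache).

```python
import base64
import hmac


def parse_basic_auth(header):
    """Return (username, password) from an Authorization header value, or None."""
    scheme, _, token = header.partition(" ")
    if scheme.lower() != "basic" or not token:
        return None
    try:
        decoded = base64.b64decode(token.strip(), validate=True).decode("utf-8")
    except ValueError:  # covers binascii.Error and UnicodeDecodeError
        return None
    user, sep, password = decoded.partition(":")
    if not sep:
        return None
    return user, password


def check_basic_auth(header, expected_user, expected_password):
    """True only if the header carries exactly the expected credentials."""
    creds = parse_basic_auth(header)
    if creds is None:
        return False
    user, password = creds
    # compare_digest avoids leaking how many characters matched via timing.
    user_ok = hmac.compare_digest(user.encode(), expected_user.encode())
    pass_ok = hmac.compare_digest(password.encode(), expected_password.encode())
    return user_ok and pass_ok


if __name__ == "__main__":
    header = "Basic " + base64.b64encode(b"alice:temporary-password").decode()
    print(parse_basic_auth(header))
    print(check_basic_auth(header, "alice", "temporary-password"))
```

Because anyone on the path can decode that header, HTTPS is what actually protects the password; BasicAuth on its own only keeps casual visitors out.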

The point of this exercise was to see how Alice and Bob would share data when they don't have a shared data platform, and the recipient wishes to pay the (temporary) storage costs. It is related to our exercise in data sharing through the use of cloud resources. Shouldn't it have been easier than writing to a portable hard drive?

(Baskets, image credit Jeremy Kemp)


19 July 2017

All things data transfery for UK ATLAS for 3 months.

As part of my ruminations on how UK Tier2 sites for WLCG are going to evolve their storage, I decided to compare and contrast historical network and file usage at UK WLCG sites for the ATLAS VO over a three-month period, and relate this information to job completion and data deletion rates.

First we have file I/O associated with Analysis jobs at the sites:

Note that not all sites run analysis jobs, so I created a similar table for production jobs:

I then thought it would be interesting to take the totals from the two tables above and see how they relate to the number of jobs run on each site's compute resources. (I am assuming that there is no access from non-local worker nodes.)

Below is a comparison of total WAN and LAN data transfer rates with the size of each Storage Element (SE) and the total volume of data deleted from it (useful if you are interested in churn rates on your SE):

The caveat on all this information is that it is very much a measure of the VO's usage of each site, and not necessarily of the site's capability.
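The two derived quantities here, data moved per job and SE churn, are simple ratios. Below is a hedged Python sketch with made-up numbers standing in for the tables (which are not reproduced here). Like the comparison itself, it assumes all I/O comes from local worker nodes; the site names and figures are hypothetical.

```python
def per_job_volume(total_bytes, jobs):
    """Average data moved per completed job (bytes/job); None if no jobs ran."""
    return total_bytes / jobs if jobs else None


def churn_fraction(deleted_bytes, se_size_bytes):
    """Fraction of the SE's capacity deleted over the period (1.0 = one full turnover)."""
    return deleted_bytes / se_size_bytes


if __name__ == "__main__":
    TB = 10**12
    # Hypothetical three-month figures, for illustration only.
    sites = {
        "SITE-A": {"io": 800 * TB, "jobs": 400_000, "deleted": 300 * TB, "se": 1500 * TB},
        "SITE-B": {"io": 120 * TB, "jobs": 90_000, "deleted": 20 * TB, "se": 400 * TB},
    }
    for name, s in sites.items():
        gb_per_job = per_job_volume(s["io"], s["jobs"]) / 10**9
        churn = churn_fraction(s["deleted"], s["se"])
        print(f"{name}: {gb_per_job:.2f} GB/job, churn {churn:.0%} over the period")
```

The same caveat applies to these numbers as to the tables: they describe how the VO used a site, not what the site could sustain.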


Labels: atlas, data, data transfer, deletion, job, networking

26 June 2017

A Storage view from WLCG 2017

I knew the WLCG 2017 meeting at Manchester was going to be interesting when it started with a heatwave! In addition to various non-storage topics, we had good discussions on accounting, object stores and site evolution.

Of particular interest, I think, are the talks from the Tuesday morning sessions on the mid-term evolution of sites. Other topics covered included a talk on a distributed dCache setup, an xrootd federation setup (using DynaFed) and the OSG experience of caching using xrootd. The network rates in the VO network requirement talks from the Tuesday afternoon session are worth a look for sites trying to calculate their bandwidth requirements, depending on what type of site they intend to be.
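When turning a VO's quoted data volumes into a bandwidth requirement, the basic arithmetic is bytes per day × 8 / 86400 s. Here is a small Python sketch. The peak factor and utilisation ceiling are illustrative assumptions, not WLCG figures, and the daily volumes are hypothetical.

```python
SECONDS_PER_DAY = 86_400


def sustained_gbps(bytes_per_day):
    """Average rate in Gb/s (decimal units) needed to move bytes_per_day in 24 hours."""
    return bytes_per_day * 8 / SECONDS_PER_DAY / 1e9


def required_link_gbps(bytes_per_day, peak_factor=2.0, max_utilisation=0.7):
    """Link capacity allowing for bursts above the mean and a utilisation ceiling.

    peak_factor and max_utilisation are illustrative assumptions, not WLCG figures.
    """
    return sustained_gbps(bytes_per_day) * peak_factor / max_utilisation


if __name__ == "__main__":
    TB = 10**12
    for daily_tb in (10.8, 50, 200):  # hypothetical daily volumes
        avg = sustained_gbps(daily_tb * TB)
        print(f"{daily_tb:>6} TB/day -> {avg:.2f} Gb/s average, "
              f"~{required_link_gbps(daily_tb * TB):.1f} Gb/s link")
```

For example, 10.8 TB/day averages exactly 1 Gb/s. With the assumed defaults above, that points to a link of roughly 3 Gb/s.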

My highlights from the Wednesday sessions were the informative talks regarding accounting for storage systems without SRMs.

The last day also contained an IPv6 workshop, where site admins not only had some theory and heard the expected timescale for deployment, but also got a chance to deploy IPv6 versions of perfSONAR/CVMFS/Frontier/squid/xrootd. (Not much different from deploying an IPv4 version, but that should not be much of a surprise.)

Links to these talks:

https://indico.cern.ch/event/609911/timetable/#20170621.detailed

https://indico.cern.ch/event/609911/timetable/#20170622.detailed

A benefit of the meeting being in Manchester was the multiple talks from the Manchester-based SKA project on their data, computing and networking requirements. Links can be found interspersed here:

https://indico.cern.ch/event/609911/timetable/

Also announced was the next pre-GDB on Storage in September:

https://indico.cern.ch/event/578974/


20 June 2017

A solid start for IPv6-accessible storage within the UK for WLCG experiments.

IPv6-accessible storage is becoming a reality in production at WLCG sites within the UK.

We already have some sites which are fully dual-stacked IPv4/IPv6. Some sites have their entire storage systems dual-hosted; others have parts of their storage systems dual-hosted as part of a staged rollout of the service. I am also aware of sites that are actively looking at providing dual-hosted gateways to their storage systems. Now we just need to work out how to monitor and differentiate network rates between IPv4 and IPv6 traffic. (This is a very liberal use of the word "just", since I understand that there is a complicated set of issues around further IPv6 deployment and its monitoring.)
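At the host level, one starting point on Linux is the kernel's own counters. `/proc/net/snmp6` has `Ip6InOctets`/`Ip6OutOctets`, and the `IpExt` lines of `/proc/net/netstat` carry the IPv4 `InOctets`/`OutOctets`. Sampling them twice gives an average rate per address family. This is only a Python sketch: it sees one host's totals, not per-flow or per-VO traffic, and the sampling interval is an arbitrary choice.

```python
def parse_snmp6(text):
    """Parse /proc/net/snmp6 (one 'Name value' pair per line) into a dict of ints."""
    counters = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2:
            counters[parts[0]] = int(parts[1])
    return counters


def parse_netstat_ipext(text):
    """Parse the IpExt header/value line pair from /proc/net/netstat (IPv4 counters)."""
    rows = [line.split() for line in text.splitlines() if line.startswith("IpExt:")]
    if len(rows) < 2:
        return {}
    header, values = rows[0][1:], rows[1][1:]  # drop the leading "IpExt:" label
    return dict(zip(header, map(int, values)))


def rate_bps(octets_before, octets_after, interval_s):
    """Average bit rate between two samples of an octet counter."""
    return (octets_after - octets_before) * 8 / interval_s


def read_octets():
    """Return {'ipv4': (in, out), 'ipv6': (in, out)} octet counters for this host."""
    with open("/proc/net/netstat") as f:
        v4 = parse_netstat_ipext(f.read())
    with open("/proc/net/snmp6") as f:
        v6 = parse_snmp6(f.read())
    return {
        "ipv4": (v4["InOctets"], v4["OutOctets"]),
        "ipv6": (v6["Ip6InOctets"], v6["Ip6OutOctets"]),
    }


if __name__ == "__main__":
    import time

    INTERVAL = 10  # seconds between samples (arbitrary)
    before = read_octets()
    time.sleep(INTERVAL)
    after = read_octets()
    for family in ("ipv4", "ipv6"):
        rx = rate_bps(before[family][0], after[family][0], INTERVAL)
        tx = rate_bps(before[family][1], after[family][1], INTERVAL)
        print(f"{family}: in {rx / 1e6:.2f} Mb/s, out {tx / 1e6:.2f} Mb/s")
```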


Storage news from HEPSYSMAN

The good news is that I heard some good talks (and possibly gave one) at the HEPSYSMAN meeting this week, just in time for the WLCG workshop next week. We started with a day on IPv6, which, as well as increasing my knowledge of networking, highlighted the timescale for storage to be dual-homed for WLCG, the volume and monitoring of traffic over IPv6 (in Dave Kelsey's talk) and issues with third-party transfers (from Tim Chown's talk).

During HEPSYSMAN proper, many good things were reported from different sites. Of particular interest is how sites should evolve. There was also a very interesting talk comparing RAID6 and ZFS. Slides from the workshop should be available here:

https://indico.cern.ch/event/592622/

19 June 2017

Hosting a large web-forum on ZFS (a case study)

Over the course of last weekend I worked with a friend on deploying ZFS across their infrastructure. Their infrastructure in this case is a popular website, written in PHP and serving some 20,000+ users. Like many GridPP sysadmins, they use CentOS for their back-end infrastructure. However, because they are regularly a high-profile target for attacks, they have opted to run their systems on the latest kernel, installed from ELRepo.

The infrastructure for this website is heavily docker orientated due to the (re)deployment advantages that this offers.

Due to problems with the complex workflow, SELinux has been set to permissive.

Data for the site was stored within a /data directory, which held both the main database for the site and the files hosted by the site.

Prior to the use of ZFS, the storage used for this site was XFS.

The hardware used to run this site is a dedicated server with 8 Intel cores, 32 GB RAM and two 2 TB disks in a software RAID mirror, partitioned using LVM.

Installing zfs

Initially setting up ZFS couldn't have been easier. Install the correct rpm repo, update, install zfs and reboot:

```shell
yum update
yum install      # the ZFS on Linux repo RPM for your CentOS release goes here
yum update
yum install zfs
reboot
```

Fixing zfs-dkms

As they are using the latest stable kernel, they opted to install ZFS using DKMS, which has its pros and cons compared with the kmod install. Unfortunately, this didn't work as it should have (possibly due to a pending kernel update on reboot). After rebooting, the following commands were needed to install the ZFS driver:

```shell
dkms build spl/0.6.5.10
dkms build zfs/0.6.5.10
dkms install spl/0.6.5.10
dkms install zfs/0.6.5.10
```

This step triggered the rebuild and installation of the SPL (Solaris Porting Layer) and ZFS modules.

(Adding these to the initrd shouldn't be required, but can probably be done as usual once they have been built.)

Migrating data to ZFS

The initial step was to migrate the storage backend and main database for the site. This storage is approximately 0.5 TB of data, made up of numerous files with an average file size close to 1 MB. The SQL database is approximately 50 GB in size and contains most of the site data.

```shell
# Move the existing XFS-backed data out of the way
mv /data/webroot /data/webroot-bak
mv /data/sqlroot /data/sqlroot-bak

# One pool per LVM logical volume
zpool create webrootzfs /dev/vgs/webrootzfs
zpool create sqlrootzfs /dev/vgs/sqlrootzfs

zfs set mountpoint=/data/webroot webrootzfs
zfs set mountpoint=/data/sqlroot sqlrootzfs

zfs set compression=lz4 webrootzfs
zfs set compression=lz4 sqlrootzfs

zfs set primarycache=metadata sqlrootzfs
zfs set secondarycache=none webrootzfs
zfs set secondarycache=none sqlrootzfs

zfs set recordsize=16k sqlrootzfs   # Matches the DB block size

# A trailing slash on the source copies its contents, including dot-files (unlike /*)
rsync -avP /data/webroot-bak/ /data/webroot/
rsync -avP /data/sqlroot-bak/ /data/sqlroot/
```

After migrating these, the site was brought back up for approximately 24 hours and no performance problems were observed.

The webroot data, which contained mainly user-submitted files, reached a compression ratio of about 1.1.

The SQL database reached a compression ratio of about 2.4.
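ZFS reports `compressratio` as logical size divided by the space actually used, so the on-disk footprint follows directly. The sketch below is a quick Python check that treats the sizes quoted above (0.5 TB of web data, 50 GB of database) as logical sizes; that is an assumption.

```python
def physical_size(logical_size, compressratio):
    """On-disk size implied by a ZFS compressratio (logical size / physical size)."""
    if compressratio <= 0:
        raise ValueError("compressratio must be positive")
    return logical_size / compressratio


def space_saved(logical_size, compressratio):
    """Logical minus physical size, in the same units as logical_size."""
    return logical_size - physical_size(logical_size, compressratio)


if __name__ == "__main__":
    # Sizes in GB, assumed to be logical (uncompressed) sizes.
    for name, logical, ratio in [("webroot", 500, 1.1), ("sqlroot", 50, 2.4)]:
        print(f"{name}: ~{physical_size(logical, ratio):.1f} GB on disk, "
              f"~{space_saved(logical, ratio):.1f} GB saved")
```

That works out to roughly 455 GB on disk for the webroot (about 45 GB saved) and about 20.8 GB for the database (about 29 GB saved). The live ratios can be read with `zfs get compressratio webrootzfs sqlrootzfs`.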

Given the increased performance of the site due to this migration, it was decided 24 hours later to investigate migrating the main website itself rather than just the backend.

Setting up systemd

The following systemd services and targets were enabled, but rebooting the system has not (yet) been tested.

```shell
# Import pools first, then mount datasets, then share them
systemctl enable zfs-import-cache
systemctl start zfs-import-cache

systemctl enable zfs-mount
systemctl start zfs-mount

systemctl enable zfs-share
systemctl start zfs-share

systemctl enable zfs.target
```

Impact of using ZFS

A good solution for this turned out to exist already: the ZFS storage driver for Docker (https://docs.docker.com/engine/userguide/storagedriver/zfs-driver/).

After this was set up, the site was brought back online and the performance improvement was notable.

Page load time for the site dropped from about 600 ms to 300 ms: a 50% drop in page load time, entirely due to replacing the backend storage with ZFS.

This was with the ARC running at a 95% hit rate.
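The ARC hit rate comes from the cumulative `hits` and `misses` counters in `/proc/spl/kstat/zfs/arcstats` on ZFS on Linux. Here is a Python sketch. The parsing assumes the usual layout of two header lines followed by `name type value` rows. Because the counters accumulate from the moment the module loaded, the interval version is the more useful one for watching a live system.

```python
def parse_arcstats(text):
    """Parse arcstats text into {name: int}, skipping the two header lines."""
    stats = {}
    for line in text.splitlines()[2:]:
        parts = line.split()
        if len(parts) == 3:
            stats[parts[0]] = int(parts[2])
    return stats


def arc_hit_rate(stats):
    """Hit rate since the module loaded (cumulative counters)."""
    total = stats["hits"] + stats["misses"]
    return stats["hits"] / total if total else 0.0


def interval_hit_rate(before, after):
    """Hit rate between two arcstats samples."""
    hits = after["hits"] - before["hits"]
    misses = after["misses"] - before["misses"]
    total = hits + misses
    return hits / total if total else 0.0


if __name__ == "__main__":
    import time

    def sample(path="/proc/spl/kstat/zfs/arcstats"):
        with open(path) as f:
            return parse_arcstats(f.read())

    before = sample()
    time.sleep(10)
    after = sample()
    print(f"hit rate over 10 s: {interval_hit_rate(before, after):.1%}")
    print(f"ARC size {after['size'] / 2**20:.0f} MiB, c_max {after['c_max'] / 2**30:.1f} GiB")
```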

Problems Encountered

Unfortunately, after about 30 minutes of running with the Docker service migrated to ZFS, the site fell over (page load times increased to multiple seconds and the backend server load spiked).

Upon initial inspection, it was discovered that the ZFS ARC had dropped to 32M (almost the absolute minimum) and the arc-reclaim process was consuming 100% of one CPU.

The ZFS ARC maximum was increased to 10 GiB, but the cache refused to grow:

```shell
echo 10737418240 > /sys/module/zfs/parameters/zfs_arc_max
```

Increasing the minimum (zfs_arc_min) forced the ARC to grow; however, the arc-reclaim process was still consuming one CPU core.
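The `zfs_arc_max` and `zfs_arc_min` parameters take bytes, which is where 10737418240 comes from (10 × 1024³). Here is a tiny Python helper that produces the same lines; the helper names are hypothetical.

```python
GIB = 1024 ** 3
PARAM_DIR = "/sys/module/zfs/parameters"


def gib(n):
    """Bytes in n GiB: the unit the zfs_arc_* module parameters expect."""
    return n * GIB


def arc_param_lines(max_gib, min_gib):
    """Shell lines setting the ARC limits (max first, so min never exceeds it)."""
    if min_gib > max_gib:
        raise ValueError("zfs_arc_min must not exceed zfs_arc_max")
    return [
        f"echo {gib(max_gib)} > {PARAM_DIR}/zfs_arc_max",
        f"echo {gib(min_gib)} > {PARAM_DIR}/zfs_arc_min",
    ]


if __name__ == "__main__":
    print("\n".join(arc_param_lines(10, 4)))
```

Writes to `/sys/module/zfs/parameters` are lost at reboot. The usual way to make them stick is an `options zfs zfs_arc_max=...` line in `/etc/modprobe.d/zfs.conf`.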

Fixing the Problems

A better workaround was found to be disabling transparent hugepages:

```shell
echo never > /sys/kernel/mm/transparent_hugepage/enabled
echo never > /sys/kernel/mm/transparent_hugepage/defrag
```

This stopped the arc-reclaim process from consuming 100% CPU as well as triggering the arc cache to start regrowing.
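These sysfs files list every option, with the active one in square brackets (e.g. `always madvise [never]`). Checking that the workaround is still in effect is therefore a matter of finding the bracketed token. A Python sketch:

```python
THP_DIR = "/sys/kernel/mm/transparent_hugepage"


def active_choice(sysfs_value):
    """Return the bracketed (active) option from e.g. 'always madvise [never]'."""
    for token in sysfs_value.split():
        if token.startswith("[") and token.endswith("]"):
            return token[1:-1]
    raise ValueError(f"no active option in {sysfs_value!r}")


def thp_status(thp_dir=THP_DIR):
    """Active 'enabled' and 'defrag' settings for transparent hugepages."""
    status = {}
    for name in ("enabled", "defrag"):
        with open(f"{thp_dir}/{name}") as f:
            status[name] = active_choice(f.read())
    return status


if __name__ == "__main__":
    print(thp_status())  # e.g. {'enabled': 'never', 'defrag': 'never'} after the workaround
```

Like the ARC settings, this does not survive a reboot. Booting with the `transparent_hugepage=never` kernel parameter is one way to persist the `enabled` setting.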

(For the interested this has been reported: https://github.com/zfsonlinux/zfs/issues/4869)

Summary of Tweaks made

A summary of some of the optimizations applied to these pools:

```shell
# ZFS settings
zfs set compression=lz4 webrootzfs         # fast, low-overhead compression
zfs set compression=lz4 sqlrootzfs         # fast, low-overhead compression
zfs set primarycache=all webrootzfs        # this is the default
zfs set primarycache=metadata sqlrootzfs   # don't keep DB data in the ARC, only metadata
zfs set secondarycache=none webrootzfs     # not using L2ARC
zfs set secondarycache=none sqlrootzfs     # not using L2ARC
zfs set recordsize=16k sqlrootzfs          # matches the DB block size

# Settings changed through /sys (not persistent across reboots)
echo never > /sys/kernel/mm/transparent_hugepage/enabled
echo never > /sys/kernel/mm/transparent_hugepage/defrag
echo 10737418240 > /sys/module/zfs/parameters/zfs_arc_max   # 10 GiB max
echo 4294967296 > /sys/module/zfs/parameters/zfs_arc_min    # 4 GiB min

# Block-device queue settings for both mirror members
for dev in sda sdb; do
    q=/sys/block/$dev/queue
    echo 4096 > $q/nr_requests
    echo 4096 > $q/read_ahead_kb
    echo 16384 > $q/max_sectors_kb    # rejected if larger than max_hw_sectors_kb
    # deadline-scheduler tunables: these iosched/ files only exist while deadline is active
    echo 0 > $q/iosched/front_merges
    echo 150 > $q/iosched/read_expire
    echo 1500 > $q/iosched/write_expire
    echo 1 > $q/iosched/fifo_batch
    # switching to noop makes the deadline tunables above irrelevant
    echo noop > $q/scheduler
done
```

Additionally, for the docker-zfs pool:

```shell
zfs set primarycache=all zpool-docker
zfs set secondarycache=none zpool-docker
zfs set compression=lz4 zpool-docker
```

All Docker containers built using this engine inherit these properties from the base pool zpool-docker. However, properties such as compression only apply to newly written data, so existing containers need to be removed and rebuilt to take advantage of them.

Labels: configuration, Data management, outreach, performance, zfs, zpool

12 April 2017

Beware the Kraken! What happens when you start plotting transfer rates.

FTS transfers are how WLCG moves a lot of its data. I decided to look at what the instantaneous rates within the transfers were. Lines in the log files appear as:

I decided to plot the value of the instantaneous rate against how often that value appeared. Plotting this for 2 of the 18 FTS servers at RAL over roughly one month of transfers gives:

This has been described as a Kraken, the hand of Freddy Krueger, a sea anemone, or a leafless tree's branches blowing in the wind. Please leave your own suggestions in the comments!
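The plot is essentially a frequency count of the instantaneous rates. The log line format isn't reproduced here, so this Python sketch starts from rates already extracted from the logs and bins them. The bin width is an arbitrary choice, and the units are whatever the log uses.

```python
import math
from collections import Counter


def rate_histogram(rates, bin_width=1.0):
    """Count how often each binned instantaneous rate occurs.

    Each rate is assigned to the bin whose lower edge is
    floor(rate / bin_width) * bin_width; returns {lower_edge: count}, sorted.
    """
    counts = Counter()
    for r in rates:
        counts[math.floor(r / bin_width) * bin_width] += 1
    return dict(sorted(counts.items()))


if __name__ == "__main__":
    # Hypothetical rates, standing in for values parsed from FTS logs.
    rates = [0.5, 1.2, 1.7, 3.0, 3.4, 3.9, 7.1]
    for edge, count in rate_histogram(rates).items():
        print(f"{edge:6.1f} {'#' * count}")
```

Plotting count against bin edge (often with a logarithmic count axis) gives the kind of shape shown above.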

I also decided to look at the subset of data for FTS transfers to the new CEPH storage at the RAL Tier1 and saw this:

My first thought is that it resembles Disney's Cinderella Castle.

https://www.pinterest.com/explore/disney-castle-silhouette/ :)


10 April 2017

First look at IPV4/6 Dual mesh perfSonar results for the RAL-LCG2 Tier1

We now have the RAL-LCG2 perfSONAR production hosts dual-stacked. I thought I would look at the differences between IPv4 and IPv6 values for throughput rates and for round-trip times from traceroute measurements. I first looked at the bandwidth measurements between pairs of hosts, and got the following plot:

I then compared the ratio of the IPv4 and IPv6 throughput measurements with the ratio of the round-trip times from the traceroute measurements:
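The comparison amounts to two ratios per host pair. This Python sketch assumes the measurements have already been pulled out of perfSONAR into dictionaries keyed by (source, destination). Taking the ratios as IPv6/IPv4 is an arbitrary choice made here. Since TCP throughput tends to fall as round-trip time rises, pairs where IPv6 takes a longer path (RTT ratio above 1) would be expected to show a throughput ratio below 1.

```python
def ratios(v4, v6):
    """Per host pair, IPv6/IPv4 ratios of throughput and of round-trip time.

    v4, v6: {(src, dst): (throughput, rtt)}, with the same units in both dicts.
    Only pairs measured over both protocols are returned.
    """
    out = {}
    for pair in sorted(v4.keys() & v6.keys()):
        (tput4, rtt4), (tput6, rtt6) = v4[pair], v6[pair]
        out[pair] = (tput6 / tput4, rtt6 / rtt4)
    return out


if __name__ == "__main__":
    # Hypothetical measurements (Mb/s, ms) for illustration only.
    v4 = {("ral", "siteA"): (900.0, 10.0), ("ral", "siteB"): (500.0, 20.0)}
    v6 = {("ral", "siteA"): (450.0, 20.0), ("ral", "siteB"): (520.0, 19.0)}
    for pair, (tput_ratio, rtt_ratio) in ratios(v4, v6).items():
        print(pair, f"throughput x{tput_ratio:.2f}, RTT x{rtt_ratio:.2f}")
```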
